This research proposal by Jagged Technology was initially targeted to SBIR program OSD08-IA2. We continue to seek sponsorship for the proposed work. If you are interested in teaming with us on future proposals on related computer systems topics, please contact us.

1. Introduction

Computer system virtualization, long a staple of large-scale mainframe computing platforms, has exploded into the commodity and off-the-shelf markets due to the widespread and affordable availability of software and hardware products that enable virtualization. As with any major change, the implications of the widespread deployment of virtualization are not yet well understood; work is urgently needed to quantify the new opportunities and risks posed by specific virtualization technologies.

In this “wild west” phase of the commodity use of virtualization technology, each of the three aspects of information security (availability, confidentiality, and integrity) is by necessity being revisited by research labs around the world. For example, here is a representative sampling of three ways information security changes under virtualization:

- Availability. Virtualization enables the straightforward deployment and management of redundant and widely distributed software components, increasing the availability of a critical computer service. (The PI has prior experience in this space.)

- Confidentiality. The use of hypervisors (a.k.a. virtual machine monitors) adds a new software protection domain to hardware platforms. This enables software workloads to run in isolation even when time-sharing the hardware with other workloads. (The PI has prior experience in this space.)

- Integrity. Any software running within this new protection domain has privileged (superuser) access to system memory and hardware resources. This access enables the creation of new software auditing tools that unobtrusively monitor software workloads in a virtual machine.

It is this latter example of software in a privileged protection domain that is of interest in this proposal. The desired outcome of this research is a quantification of an opportunity (injecting software into a virtual machine) and a risk (the detectability of such injection). Specifically, Jagged Technology proposes:

- In Phase I, we will develop a software tool that runs inside a privileged protection domain. [Footnote: This could be from a privileged VM (in what are known as Type I hypervisors), from a host OS (in Type II VMMs), or from the hypervisor itself. In the approach described later in this proposal, our tool runs in a privileged VM.] This tool will manipulate data structures in the memory of an ordinary protection domain (a virtual machine), in order to covertly load and run general-purpose software within the virtual machine.

- In Phase II, we will explore ways to detect the covert general-purpose software or to thwart the operation of our stealth-insertion tool. For example, this may include obfuscating the method by which the operating system maintains its queue of active and inactive processes, or accessing memory pages in a statistically random fashion to analyze the timing of page faults to those pages.
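The page-fault timing countermeasure in the Phase II bullet can be sketched as a simple outlier test. This is a minimal illustration under stated assumptions, not a design. It assumes that a probe inside the VM has already recorded the latency of the first access to each page; how those latencies are measured is out of scope here. The helper names `random_probe_order` and `flag_slow_pages`, the median baseline, the 10x threshold and the sample data are all hypothetical. The premise is that an access intercepted by an external page-fault handler costs far more than an ordinary access, so intercepted pages should stand out against the median.

```python
import random
import statistics
from typing import Dict, List, Sequence


def random_probe_order(page_ids: Sequence[int], seed: int) -> List[int]:
    """Return the pages in a shuffled order so the probe sequence is unpredictable."""
    order = list(page_ids)
    random.Random(seed).shuffle(order)
    return order


def flag_slow_pages(latency_ns: Dict[int, float], factor: float = 10.0) -> List[int]:
    """Flag pages whose access latency exceeds `factor` times the median latency.

    Assumption: an access trapped by an external monitor is much slower than
    an ordinary access, so such pages appear as outliers against the median.
    """
    if not latency_ns:
        return []
    baseline = statistics.median(latency_ns.values())
    return sorted(p for p, t in latency_ns.items() if t > factor * baseline)


# Hypothetical first-access latencies (nanoseconds) for six pages.
samples = {0: 95.0, 1: 110.0, 2: 102.0, 3: 48_000.0, 4: 99.0, 5: 105.0}
print(random_probe_order(list(samples), seed=7))
print(flag_slow_pages(samples))  # → [3]
```

A randomized probe order keeps an external monitor from anticipating which page will be touched next. A practical detector would also need repeated trials, because ordinary demand paging, TLB misses and cache effects produce latency outliers of their own.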

By covert, we mean primarily that an adversary is unable to read the contents of the memory locations containing the instructions or data used by our covert software, and secondarily that evidence in audit and system logs is minimized or removed to reduce the odds that the covert software will be detected. We assume that this adversary is able to run software with full administrative privileges (root access) inside one or more non-privileged virtual machines on the hardware platform. It may also be possible to use our approach to monitor one privileged VM from another VM, addressing the case where an adversary has administrative privileges inside the trusted computing base. However, we focus only on non-privileged virtual machines—the location where most military and commercial applications will actually be run—in this proposal.

2. Phase I objectives

We identify four base objectives for our Phase I work. The first pair of objectives involves the covert loading of software in a virtual environment:

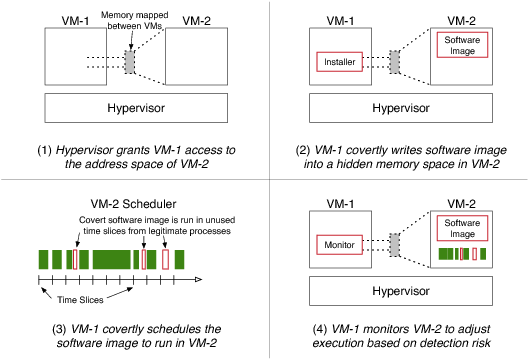

- Objective #1: Use software running in one virtual machine (VM–1) to insert executable code, including instructions and data, into another virtual machine (VM–2).

- Objective #2: Prevent software in VM–2 from being able to read or otherwise obtain the instructions and data of the inserted code.

The second pair of objectives involves the covert execution of software in a virtual environment:

- Objective #3: Use software running in VM–1 to cause the operating system in VM–2 to actually execute the inserted code.

- Objective #4: Prevent software in VM–2 from using the operating system’s audit facilities to trace or track the execution of the inserted code.

3. Phase I work plan

We propose work to build a software tool that causes a rogue process to be covertly loaded into a Xen virtual machine running the Linux operating system, and further causes that rogue process to be covertly executed. This work addresses the four Phase I technical objectives and is organized into three tasks:

- Task I: Architecture and documentation. Develop prototype algorithms for modifying the Linux OS structures and Xen page table entries to support covert insertion and execution of software into a virtual machine.

- Task II: Covert insertion (objectives #1 and #2). Create a minimal software prototype to insert code into a VM from the Domain-0 VM. [Footnote: Domain-0 is a privileged virtual machine containing the Xen command-line system management tools and also the device drivers that coordinate with the real system hardware.] Demonstrate how the memory pages used by the code are protected against unauthorized access from other software components inside the VM.

- Task III: Covert execution (objectives #3 and #4). Extend the minimal software prototype to externally cause the OS inside the VM to schedule and execute the code. Demonstrate how the OS audit facilities are bypassed or modified to hide the execution of the code.

This figure demonstrates a high-level view of the technique we will use to insert software into a virtual machine:

3.1 Developmental and experimental platform

We plan to execute this project using the Linux operating system. We will covertly insert processes into a running instance of Linux and prevent other processes (or components of the Linux kernel) from accessing the memory of our covertly inserted processes. To create our virtual environment we will use the open-source Xen hypervisor (http://xen.org) deployed on systems with processors based on the Intel x86 architecture.

We note that nothing about our approach is specific to the use of Linux or Xen. We envision future work to extend the technology developed under this program into a multi-purpose tool that works with other operating system and hypervisor combinations.

Our approach does not depend on whether the processor supports hardware-assisted virtualization. Our tool will be designed to work on platforms whether or not their processors support Intel Virtualization Technology or AMD Virtualization.

3.2 Task I: Architecture and documentation

We will create an architecture document describing the method by which our tool works. This document is intended to preserve the government’s knowledge of this technique beyond the end of this contract. The document will describe the functional design of our prototype tool and provide software and hardware specifications for the execution environment in which our tool runs. Because the limited scope of the Phase I effort means our prototype will be minimal, this document will also describe the tasks necessary to refine and extend the prototype for commercial viability and to meet the Phase II objectives.

As part of this task we will create a list of candidate countermeasures to our tool’s operation, based on our insider knowledge from the process of creating the tool. This list will highlight the most promising of these countermeasures that could form a core component of the Phase II effort.

3.3 Task II: Covert insertion

By drawing on the introspection principles demonstrated by the Livewire prototype, the XenAccess monitoring library and other security projects designed to allow one VM to monitor another VM, we will create a well-documented minimal software prototype for covert insertion of a rogue process.

This prototype will use the Xen grant table page-management interface to map and modify the contents of the VM from within Domain-0. The prototype will analyze the Linux data structures inside the VM to create a rogue process and to determine which pages are currently available for assignment to the rogue process.

In our approach we will subtly modify the page table entries for the VM—specifically, changing entries to remove the “page present” bit—to cause Xen to trap page faults into our own page-fault handler. This approach is along the lines of the method used by the Shadow Walker rootkit to hide the existence of modified pages from the operating system.
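The “page present” bit is the P flag, bit 0 of an x86 page-table entry. When P is clear, the processor does not use the entry for translation, and any access through the entry raises a page fault (#PF). This gives a fault handler the chance to observe the access. The sketch below only decodes the bits of an entry. It assumes the 64-bit (PAE/IA-32e) entry layout and a 52-bit maximum physical address. The helper names and the sample entry value are hypothetical. How Xen routes such a fault depends on the guest’s paging mode, which the sketch does not model.

```python
from typing import Dict

# x86 64-bit page-table entry bits (see the Intel SDM, Vol. 3, "Paging").
PTE_PRESENT = 1 << 0    # P: 1 = translation valid; 0 = any access raises #PF
PTE_WRITABLE = 1 << 1   # R/W: 1 = writes allowed
PTE_USER = 1 << 2       # U/S: 1 = user-mode access allowed
PTE_ACCESSED = 1 << 5   # A: set by the processor when the page is accessed
PTE_DIRTY = 1 << 6      # D: set by the processor when the page is written
PTE_NX = 1 << 63        # XD/NX: 1 = instruction fetches disallowed (if enabled)
# Frame address bits 12-51 for a 4 KiB page, assuming a 52-bit MAXPHYADDR.
FRAME_MASK = 0x000F_FFFF_FFFF_F000


def decode_pte(entry: int) -> Dict[str, object]:
    """Split a 64-bit entry into its 4 KiB frame address and the flags above."""
    return {
        "present": bool(entry & PTE_PRESENT),
        "writable": bool(entry & PTE_WRITABLE),
        "user": bool(entry & PTE_USER),
        "accessed": bool(entry & PTE_ACCESSED),
        "dirty": bool(entry & PTE_DIRTY),
        "no_execute": bool(entry & PTE_NX),
        "frame": entry & FRAME_MASK,
    }


def access_faults(entry: int) -> bool:
    """With P clear, the other bits are ignored and any access faults."""
    return not (entry & PTE_PRESENT)


pte = 0x8000_0001_2345_6067  # hypothetical entry: P, R/W, U/S, A, D and NX set
print(decode_pte(pte))
print(access_faults(pte), access_faults(pte & ~PTE_PRESENT))  # → False True
```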

As discussed in section 1, our primary definition of covert is that an adversary is unable to read the contents of the memory locations containing the instructions or data used by our covert software. Our approach fulfills this definition of covert: pages containing our data are accessible inside the virtual machine, but by trapping the virtual machine’s page faults we monitor and gate which processes are allowed to read those pages. If anyone other than our rogue process attempts to read the pages, we could overwrite the page contents before completing the page fault. We could even use this technique to have our rogue process share pages with legitimate processes, further obscuring the insertion of the rogue memory contents.

We expect that some modifications we will need to make to the OS data structures would ordinarily require full-kernel locking for safety. Locking can be a noticeable event, so we will attempt to create a tool that leverages other events in the virtual environment (such as domain scheduling) to obviate the need for explicit locking. Our prototype will first hook the Xen VM scheduler and domain-management infrastructure to passively determine when it is safe to modify the domain’s memory (i.e., when the domain is blocked or otherwise not scheduled). We will then investigate an algorithm for modifying the VM on the fly without first quiescing it, by monitoring which process is active inside the VM and determining which pages are in that process’s working set. If the active process changes during our modifications, our prototype will have the option of immediately pausing the domain to complete its work while avoiding overt detection. [Footnote: A more refined option, to be explored in Phase II, is to monitor which processes are currently running on which processors, and to pause the VM only when it unexpectedly enters kernel mode or otherwise performs potentially unsafe actions.]

3.4 Task III: Covert execution

By drawing on the principles demonstrated by the Xenprobes library, the Kprobes framework, and other security projects designed to allow an administrator to hijack the scheduler, debug the operating system, or probe the execution of a Linux virtual machine, we will create a well-documented minimal software prototype for covert execution of a rogue process. This could involve either inserting a new process onto the scheduler’s queue, or if possible hijacking existing processes for brief amounts of time to cause them to execute work on our behalf.

The focus of this task is both to cause the process to be executed and to do what is possible to cover the tracks of that execution by limiting the amount of resources consumed by our rogue process and by modifying the audit logs kept by the operating system to erase any entries or values indicating that our process executed. This satisfies our secondary definition of covert, in that evidence in audit and system logs is minimized or removed to reduce the odds that the covert software will be detected. As we develop the software for this task, we will explore the limit of stealth execution—identifying what evidence is visible during the actual execution of a process (for example, system status entries in the /proc file system) and determining whether this real-time evidence can be spoofed or removed.

Quantifying the many ways that a process leaves evidence of its execution has benefits both in Phase I and in Phase II, where one of the tasks will be to develop countermeasures to a tool like ours based on information available to an adversary inside the virtual machine. There are many types of evidence that may be observable by an adversary. For example, one side effect of mapping one virtual machine’s memory page in another VM is that it could change the contents of the memory caches (in essence, VM–1 could potentially preload the L2 cache with data from pages that VM–2 will soon access), which would change the timing characteristics of operations that the second VM would perform. Our continued identification of these issues will be incorporated into the architecture document from Task I.

3.5 Discussion and alternate approaches

Our approach relies on the existence of an inviolate trusted computing base (TCB) on the target system—in other words, there is a privileged part of the overall virtualized system to which the adversary cannot ordinarily gain access. For example, operating systems inside a Xen guest domain (virtual machine) cannot ordinarily access memory owned by other virtual machines unless they are specifically authorized to do so by both the administrator of the other domain and by the hypervisor itself.

Requiring a TCB does not mean that we require any sort of Trusted Computing hardware or specialized software modules in the hypervisor. Rather, it emphasizes that our focus is on using virtualization to hide the existence of software from the vantage point to which an adversary will most commonly have access. Once we have developed the capability to externally insert a process into a running kernel, our tool could be deployed in more elaborate environments, such as on specialized external hardware.

There is a panoply of alternative approaches to the covert insertion of software. Two examples are:

- An interesting aspect of certain virtualization (and virtualization detection) hacks, as well as a number of exploits, is their reliance on unmapped (or differently mapped) access to physical memory from devices. With more and more powerful devices on the bus, many of them sporting their own CPUs, an alternate type of virtualization attack involves embedding “undetectable” code in the firmware of these devices. This is particularly applicable to network cards with protocol offload functionality, since these would have network access and be able to snoop traffic or play man-in-the-middle, in addition to looking at host memory. Of course, with hardware access, it would always be possible to read the firmware off the peripheral’s ROM. However, assuming that there is no practical way to scan the firmware from the host CPU, and assuming that reflashing the ROM requires cooperation from the device CPU, it could be possible for the code to incorporate itself into new firmware images loaded from the host. This would make it not only covert but difficult or impossible to eradicate without physical disassembly of the board.

- Another approach involves encrypting the software and executing it on a protected hardware decryption offload device (or on a specially modified CPU). This would allow for simple storage and loading of the software while defending against its reverse-engineering by the adversary. This does raise the question of where to store the decryption key, or how to load it securely, especially in a large distributed environment. Assuming that Trusted Computing hardware is available on the system, one potential solution would be a non-encrypted seed loader (itself a minimal hypervisor) that verifies its own integrity, and that it is executing outside of a virtual environment, before obtaining the decryption key from the network using peer-to-peer or other protocols.

Both of the above approaches rely on the availability of special-purpose hardware to perform independent execution, decryption, or attestation. Requiring special-purpose hardware is technically practical, thanks to products such as the IBM 4768 secure cryptographic coprocessor card, but we anticipate that the cost of deploying and managing such hardware widely will keep hardware-based solutions impractical for years to come. Our solution does not require specialized hardware and is therefore immediately practical, at the expense of not protecting against an attacker with physical hardware access.

A third example combines our approach with well-known recent work:

- Inserting a virtualization layer between the existing virtualization layer and the hardware, the technique demonstrated by the recent Blue Pill and SubVirt work on multi-layer virtual machine monitors, would allow our covert software insertion tool to run at a level more privileged than all other software on the system. This would enable us to use the techniques developed in Task II to insert protection software anywhere, including in the VMM, a privileged VM, or an ordinary VM. To be effective, such an approach would likely need to be predeployed on all systems to be monitored (i.e., every system either already runs multiple layers of VMMs or can be rebooted to install another layer on demand), and would also likely employ techniques that attempt to hide the existence of the extra virtualization layer.

All currently demonstrated technologies for running multiple layers of virtualization on an x86 system are highly specialized to the specific hypervisor technology deployed on the system. As these technologies are seemingly in a state of constant flux, we choose to focus this proposal on developing the techniques for the external insertion and scheduling of rogue software; once developed, these techniques may be placed and run anywhere, including in such an extra virtualization layer.

We note that our team has expertise in all three of these alternate areas. The technologies we develop under this program would certainly have relevance in any future research and development work that explores broader forms of software insertion and countermeasures to insertion.