Although I’ve blogged in various forms since 1996 or so, I first set up a WordPress blog in 2008. That blog was hosted on the Jagged Technology website and was intended to convey information of interest to Jagged and its customers — the idea being that if I provided a high signal-to-noise ratio of useful technical content then it might help my sales figures. Within a few days I started receiving spam comments on the blog, to which my heavy-handed solution was to disable comments altogether.

Earlier this year I set up a new WordPress blog here on my personal website, in order to have somewhere to post my aviation experiences as I experienced them. Given that I was decommissioning the Jagged website I decided to move my old posts to this site (a process that you’d think would be simple — export from a WordPress site and import into a WordPress site — but wasn’t; in the end an old-fashioned copy-and-paste between browser windows gave the best results in the shortest amount of time).

My good friend Jay asked about the conference reports:

Do you keep the notes public to force yourself to write? a form of self-promotion? or what.

Yes to all three. My primary motivation for putting the conference reports up is as an archival service to the community; there aren’t that many places you can go to learn about CCS 2009, for example, and since I write the reports anyway (for my own reference and for distribution to my colleagues) I post them in case anyone now or in the future might find them useful. Everybody has a mission in life, and mine is apparently to provide useful summary content for search engines and Internet archives.

With the new blog I decided to keep comments enabled, first out of curiosity about spam (during a visit to Georgia Tech a few years ago, one of the researchers asked why I didn’t spend more time analyzing spam instead of simply deleting it) and second on the off chance that somebody wanted to reply to one of my aviation posts with, say, suggested VFR sightseeing routes in the greater Massachusetts area.

And wow, has my curiosity about spam been piqued. I created the blog at 12:16am on February 4, 2012; the first spam message arrived at 2:16am on February 9. The second arrived at 12:42pm that day. Recognizing a trend, I hustled to enable the Akismet anti-spam plugin. Akismet works in part by crowdsourcing: If someone else on another site marks a WordPress comment as spam, and the comment later gets posted on my site, Akismet automatically marks it as spam. Since enabling the plugin sixty-eight days ago:

- Number of spam comments posted on Jagged Thoughts: 312

- Number of non-spam comments posted on Jagged Thoughts: 0

- Number of false negatives (comments mistakenly marked as non-spam by Akismet): 1

So I’m averaging 4.6 spam comments per day. That’s significantly fewer than I expected to receive, though perhaps this site hasn’t yet been spidered by enough search engines to be easily found when spam software searches for WordPress sites.
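For anyone who wants to check the arithmetic, here is a minimal Python sketch. The function name `spam_rate` is made up for illustration; the two inputs are simply the spam count and the number of days quoted above.

```python
def spam_rate(total_spam: int, days: int) -> float:
    """Average number of spam comments per day over the given period."""
    if days <= 0:
        raise ValueError("days must be positive")
    return total_spam / days


# Figures from the tally above: 312 spam comments in 68 days.
rate = spam_rate(312, 68)
print(f"{rate:.1f} spam comments per day")
```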

I was prompted to write this post by an order-of-magnitude improvement in spam quality in a couple of messages I received yesterday. To date, most of the spam has fit into one of these three categories:

- Do you need pharmaceuticals? We can help!

- Would you like more visitors to your site? We can help!

- Are you dissatisfied with who’s hosting your site? We can help!

Even without Akismet it is easy to identify spam simply by looking at (a) the “Website” link provided by the commenter or (b) any links included inside the comment. Such links invariably point to an online pharmacy, or to a Facebook page with a foreign name but a profile picture of Miley Cyrus, or to a provider of virtual private servers, or to some other such site. Also, almost none of the spam comments are attached to the most recent post. My theory here is that comments on older messages are less likely to be noticed by site admins but are still clearly visible to search engines. (There’s an option in WordPress to disable comments on posts more than a year old; now I understand why it’s there.)
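The eyeball test described above could be roughed out in a few lines of Python. This is only a sketch under loud assumptions: the keyword list, the function names (`extract_links`, `spam_signals`), and the two signal labels are all hypothetical, and it has nothing to do with how Akismet or WordPress actually work. It checks the commenter's “Website” field and any links in the comment body against a few suspicious words, and notes whether the comment landed on something other than the newest post.

```python
import re
from datetime import date

# Hypothetical keyword list, chosen only to mirror the spam categories above.
SUSPICIOUS_WORDS = ("pharmacy", "pills", "vps", "hosting")

# Deliberately simple URL matcher; real comment bodies would need more care.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+", re.IGNORECASE)


def extract_links(website: str, body: str) -> list[str]:
    """Collect the commenter's 'Website' link plus any links in the body."""
    links = URL_PATTERN.findall(body)
    if website:
        links.append(website)
    return links


def spam_signals(website: str, body: str,
                 post_date: date, newest_post_date: date) -> list[str]:
    """Return the (assumed) warning signs that apply to a comment."""
    signals = []
    links = extract_links(website, body)
    if any(word in link.lower() for link in links for word in SUSPICIOUS_WORDS):
        signals.append("suspicious link")
    if post_date < newest_post_date:
        signals.append("not on newest post")
    return signals


# Example: a comment on an old post whose Website field points at a pharmacy.
print(spam_signals("http://cheap-pharmacy.example", "Great post!",
                   date(2009, 11, 15), date(2012, 4, 1)))
```

A keyword list like this would be trivially easy for spammers to dodge, which is presumably why a shared, crowdsourced service does a better job than any rule I could write by hand.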

There are spam comments about my compositional prowess:

This design is wicked! You definitely know how to keep a reader entertained. Between your wit and your videos, I was almost moved to start my own blog (well, almost…HaHa!) Great job. I really enjoyed what you had to say, and more than that, how you presented it. Too cool!

Comments that are clearly copied from elsewhere on the Internet:

In the pre-Internet age, buying a home was a long and arduous task. But the Internet of today helps the buyer to do their own preliminary work-researching neighborhoods, demographics, general price ranges, characteristics of homes in certain areas, etc. Now with a simple click, home buyers can access whole databases featuring statistics about neighborhoods and properties before they have even met the realtor.

Comments that are WordPress-oriented:

Howdy would you mind stating which blog platform you’re using? I’m going to start my own blog in the near future but I’m having a hard time deciding between BlogEngine/Wordpress/B2evolution and Drupal. The reason I ask is because your layout seems different then most blogs and I’m looking for something unique. P.S Sorry for getting off-topic but I had to ask!

Comments written in foreign languages, comments that are nothing but long lists of pharmaceutical products with links, and comments that are gibberish.

Once per week I’ve gone through and skimmed the comments marked as spam, just to make sure that I didn’t miss someone’s useful post debating, say, the merits of purchasing personal aviation insurance or always renting from flying clubs that provide insurance to their members. Over the past week I’ve received three spam comments containing information that clearly relates to the text of the post. For example, this comment on my “Discovering flight” post:

Absolutely but the overall senfuleuss is tied to the complexity of the simulator and cost of the simulator Airlines and places like Flight Safety use large simulators with exact replicas of the cockpits of the specific plane being simulated, mounted on hydraulic systems that provide 3 degrees of motion, and video displays for each window providing outside views. These have become so realistic that you can do most of the flying required for a type certifcate on them, and airlines use them for aircrew checkrides. Moving downward from these multimillion dollar systems, there are aircraft specific sims that have the full cockpit, but without the 3 axis motion, all the way down to the cheapest flight training devices recognized by the FAA. These are not much different than MS Flight Simulator, but have an physical replica of a radio stack, throttle, yoke and rudder pedals. You can used these type of devices to log a small portion of the instrument time required for your instrument rating. One problem common to most simulators is that they tend to be harder to hand fly than an actual airplane is, particularly the lower end sims. If you are refering to a non-FAA approved simulator, like MS Flight sim, it provides no help in learning how to handle a plane. When flying real plane the forces on the controls provide an immense amount of feed back to the pilot that is missing from a PC simulator. The other problem with a PC sim is that you can not easily look around and maintain control trying to fly a proper traffic pattern on FSX is almost impossible. A home sim can be helpfull in practicing rarely used instrument procedures, things like an NDB approach or a DME arc, but it of course it does not count to your instrument currency in any way. I have also used FSX to check out airports that I will be visiting in real life for the first time. 
It does an accurate enough representation of geographic features that can help you place the airport in relationship to terrain in advance of the flight.

I am thrilled that the spam software authors have started performing analytics to ensure that I receive relevant and topical spam comments! The above comment includes genuinely useful observations about using home flight simulation software to augment pilot training:

- When flying real plane the forces on the controls provide an immense amount of feed back to the pilot that is missing from a PC simulator.

- The other problem with a PC sim is that you can not easily look around and maintain control trying to fly a proper traffic pattern […] is almost impossible.

- A home sim can be helpfull in practicing rarely used […] procedures

- It does an accurate enough representation of geographic features that can help you place the airport in relationship to terrain in advance of the flight.

Early in my own flight training I tried using Microsoft Flight Simulator 2004 along with a USB flight yoke and USB foot pedals (all of which I’d bought back in 2006) to recreate my training flights at home and to squeeze in some extra practice. For the most part I found the simulator ineffective in improving basic piloting skills — as examples, the simulator did nothing to help me with memorizing the correct relationship between the airplane nose and the horizon when attempting to transition from climb to level flight at 110 knots, and it did not display useful real-world visual references as I flew traffic patterns around area airports. However, I found the simulator very useful in practicing the preflight and in-flight checklists, in memorizing which instruments were in which location on the Skyhawk’s control panel, in practicing taxi procedures around Hanscom airport given various wind conditions, and in reviewing the directions and speeds my instructor chose when we flew between KBED and KLWM airports.

Of course it’s not surprising that the comment contained insightful and critical commentary, given that it’s taken verbatim from Yahoo! Answers (“Is flying with simulators help in real flight training?”). What’s surprising, and exciting, is that I’ve started receiving higher quality, targeted, and relevant spam based on the topics I post! Randall Munroe would be proud. Hopefully this trend will continue and spam software will provide me with similarly useful, carefully selected, topically relevant information, helping me to become a better pilot. (Note to spam software authors: Just kidding. Please don’t target this site for extra spam.)

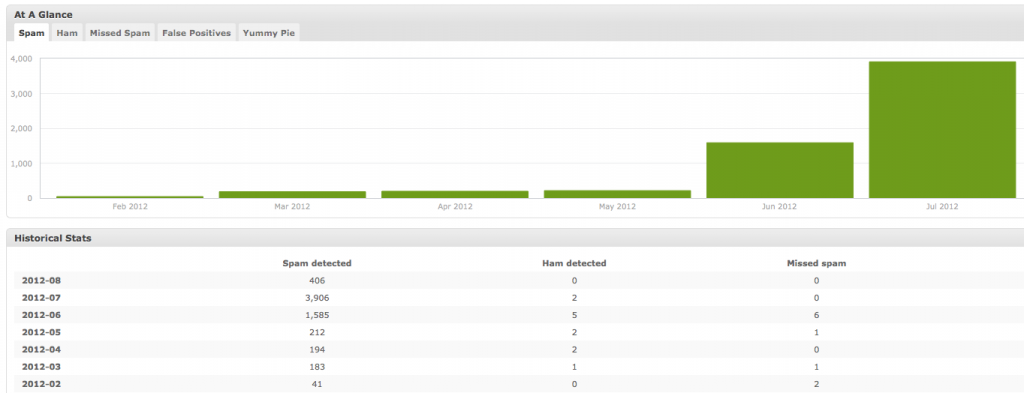

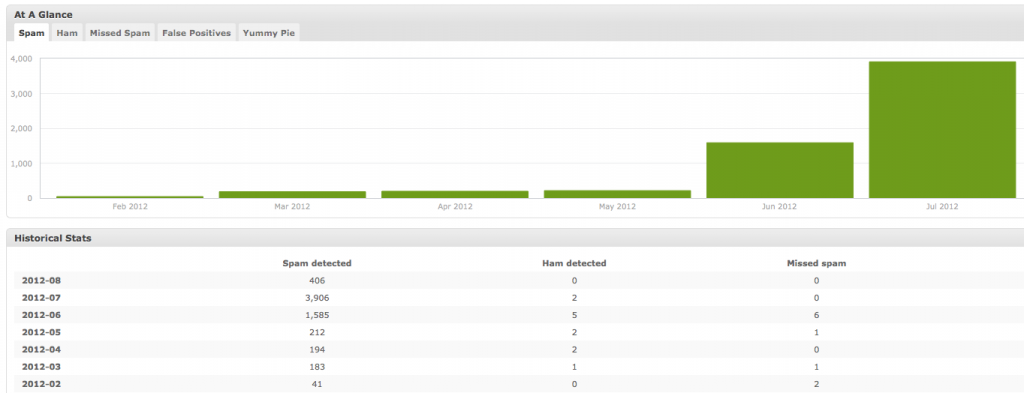

EDIT (August 2, 2012): I apologize to the spam software authors. For the past two months this article has received an exponentially increasing amount of spam, currently about 300/day:

Look, folks, I apologize. I wasn’t trying to piss you off.

I assume your motivation is economic. (I may be wrong; perhaps you’re nihilistic, anarchistic, or simply interested in chaos theory.) Spam is a lucrative business. What I’m saying is that with a few small changes it can be even more lucrative. Given the equation:

more approved comments = more planted links to your SEO and pharmacy sites = more revenue for you

Your economic goal is therefore to get more comments approved. You’ve already taken the first step of copying paragraphs of user-generated text from Wikipedia, Yahoo, and the like, instead of relying on stock phrases such as “payday loans uk”. I bet that simple change significantly increased both your approval percentage and your profit.

The next step is to be more selective in what content your bots copy-and-paste as spam. Given a blog post about buying a house, you have a greater chance of having your spam comment approved if you include real-estate-oriented (“higher quality”) text rather than, say, unrelated passages about hair loss or railway construction in China.

Beyond that, socialbots (see for example Tim Hwang’s talk “I’m not a real friend, but I play one on the Internet”) show promise for spammers. It’s one thing to trick an author into approving your spam comment; it would be another level of efficacy altogether to trick a site’s user community into having a comment-based conversation with your spambot.

So don’t shoot (or spam) the messenger; instead consider using my thoughts as inspiration to step up your game.