I’m almost finished with flight training for my private pilot certificate! That’s actually a little disappointing, because I’ve been enjoying the training so much; I probably won’t fly nearly this much after the lessons are over.

Two weeks ago I performed one of my two required solo cross-country flights. When I first heard about this requirement I hoped that cross-country meant a flight from Boston to Seattle (cross country) or even to Winnipeg (cross countries) but it turns out it just means, in the exciting parlance of Title 14 of the Code of Federal Regulations, Part 61.109(a)(5)(ii), simply:

> one solo cross country flight of 150 nautical miles total distance, with full-stop landings at three points, and one segment of the flight consisting of a straight-line distance of more than 50 nautical miles between the takeoff and landing locations.

I’d also hoped that I would be able to pick the airports for my cross-country training flights, but that choice too is regulated, this time by the flight school. For my first flight my instructor assigned one of two approved routes, in this case KBED-KSFM-KCON-KBED — Bedford, MA, to Sanford, ME, to Concord, NH, and back to Bedford.

(Astute readers will calculate that route as only covering 143 nautical miles. Although that distance doesn’t meet the requirement above, it’s okay since the school requires that students perform a minimum of two solo cross-country routes; the next one will be a 179-mile trip to Connecticut and back. I suspect the school chose those airports for the first “solo XC” because SFM and CON are both non-towered airports that are easy to find from the air, which makes for good practice and confidence-building for the student, and because the student has already flown round-trip to SFM with an instructor at least once.)
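For the arithmetically inclined, here is a minimal sketch of that check in Python. It is only my reading of the rule quoted above, not an official tool. The function name and the per-leg distances are assumptions: the leg values are rough placeholders chosen to add up to the 143 and 179 nautical mile totals mentioned here, not figures measured from a chart.

```python
# Sketch of the solo cross-country distance check, as I read the quoted rule.
# All names and leg distances below are illustrative assumptions.

MIN_TOTAL_NM = 150.0      # total distance required
MIN_LONG_LEG_NM = 50.0    # one leg must be MORE than this, straight-line
MIN_LANDING_POINTS = 3    # full-stop landings at three points


def meets_solo_xc_requirement(leg_distances_nm):
    """Return True if the route satisfies all three conditions.

    Assumes each leg is a straight-line distance in nautical miles and
    ends with a full-stop landing at a distinct airport.
    """
    total = sum(leg_distances_nm)
    landing_points = len(leg_distances_nm)
    has_long_leg = any(d > MIN_LONG_LEG_NM for d in leg_distances_nm)
    return (
        total >= MIN_TOTAL_NM
        and landing_points >= MIN_LANDING_POINTS
        and has_long_leg
    )


# Placeholder legs summing to 143 nm (first route): total is too short.
first_route = [61.0, 37.0, 45.0]
# Placeholder legs summing to 179 nm (second route): long enough.
second_route = [70.0, 50.0, 59.0]

print(meets_solo_xc_requirement(first_route))   # False
print(meets_solo_xc_requirement(second_route))  # True
```

The first route fails only on total distance; it still has three landings and one leg longer than 50 nautical miles, which is presumably why it makes a good warm-up.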

The most memorable aspect of my first solo cross-country flight was that everything happened so quickly! Without an instructor around to act as a safety net, I had an ever-present feeling that I was forgetting something or missing something:

- Oh shoot I forgot to reset the clock when I passed my last checkpoint; where exactly am I right now?

- Oh shoot I haven’t heard any calls for me on this frequency lately, did I miss a message from air traffic control to switch to a different controller’s frequency?

- Oh shoot I’ve been so busy with the checklist that it’s been over a minute since I looked outside the cockpit…is someone about to hit me? Am I about to collide with a TV tower?

There were two genuine wide-eyed moments on the flight:

- Traffic on a collision course. While I was flying northeast at 3,500 feet, air traffic control informed me that there was another plane, in front of me, headed towards me, at my altitude. Yikes. I hesitated while looking for the plane, until ATC notified me again, a little more urgently, that there was a plane in front of me at my altitude, except that it was a lot closer than it had been a moment before. I (a) asked ATC for a recommendation, (b) heard them recommend that I climb 500 feet, and (c) did so, forthwith. Moments later I saw the plane, passing below me and just to my left; we would have missed each other, but not by much.

Lesson learned: What I should have done was immediately change altitude and heading as soon as I got the first notification from ATC. I delayed because I didn’t comprehend the severity of the situation; it’s pretty rare for someone to be coming right at you, and this was the first time it had happened to me. Given the other pilot’s magnetic heading, that plane was flying at an altitude contrary to federal regulations, which would have been small consolation if we’d collided. (Sub-lesson learned, as my Dad taught me when I was learning to drive: Think of the absolutely dumbest, stupidest, most idiotic thing that the other pilot could possibly do, and prepare for him to do exactly that.)

- Flight into a cloud. During the SFM-CON leg I flew (briefly and unintentionally) into a cloud. Yikes. The worst part is I didn’t even see the cloud coming; visibility was slowly deteriorating all around me, so I was focusing mostly on the weather below and to my left, trying to determine when I should turn left to get away from the deteriorating weather. All of a sudden, wham, white-out. At the time I was flying at 4,500 feet with a ceiling supposedly at 6,500 feet in that area, at least according to my pre-flight weather briefing, so I’d expected to be well clear of the clouds. (The clouds probably had been at 6,500 feet two hours before when I got the weather briefing.)

Flying into clouds without special “instrument meteorological conditions” training is (a) prohibited by the FAA and (b) a bad idea; without outside visual references to stay straight-and-level you can pretty quickly lose your spatial orientation and crash. During flight training you’re taught what to do if you unintentionally find yourself in a cloud, and turning around is usually your best option: check your current heading, make a gentle 180-degree turn, keep watching your instruments to make sure you’re not gaining or losing altitude or banking too steeply, exit the cloud, unclench. Fortunately, an opening quickly appeared in the cloud below me, so I immediately heaved the yoke forward and flew down out of the cloud, then continued descending to a safe altitude (safe above the ground and safe below the clouds).

Lesson learned: I should have changed my flight plan to adapt as soon as I noticed the weather start to deteriorate. First, I should have stopped climbing once I noticed that visibility was getting worse the more I climbed. Second, given that my planned route was not the most direct route to the destination airport, I should have diverted directly toward the destination (where the weather looked okay) as soon as the weather started getting worse instead of continuing to fly my planned route.

Despite these eye-opening moments, the flight went really well. My landings were superb — I am pleased to report that, now that I have over 150 landings in my logbook, I can usually land the plane pretty nicely — and once I settled into the swing of things I had time to look out the window, enjoy the view, and think about how much fun I’m having learning to fly.

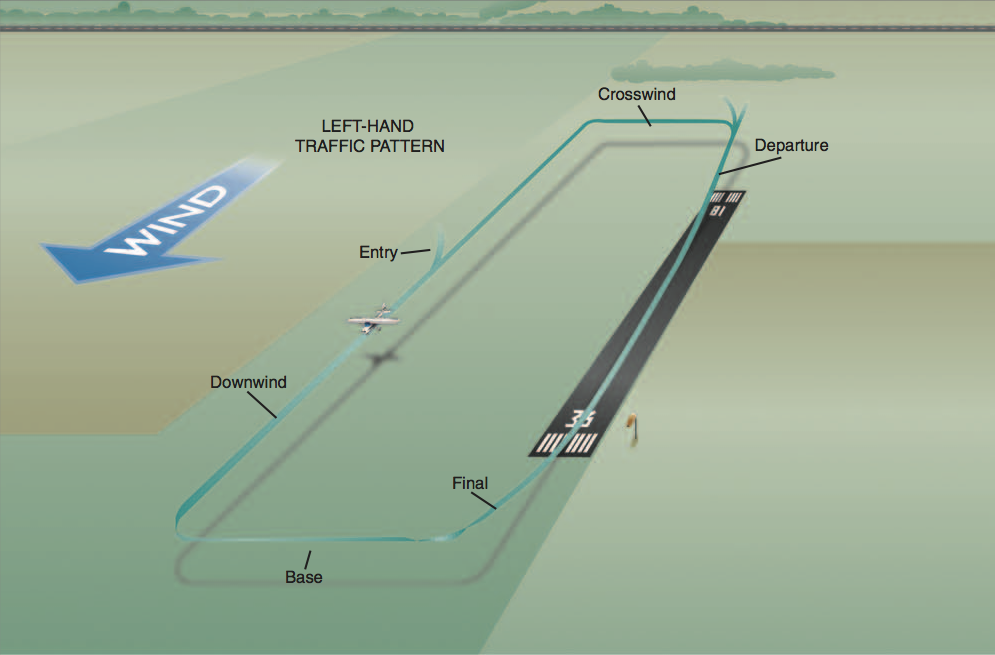

Unfortunately I haven’t yet had the opportunity to repeat the experience. I was scheduled to fly the longer cross-country flight the next weekend, but the weather didn’t cooperate. I was then scheduled to fly the longer cross-country flight the next next weekend, but the weather didn’t cooperate. So I’m hoping that next weekend (the next next next weekend, so to speak) will have cooperative weather. Once I finish the second cross-country flight I will then pass the written exam, then take “3 [or more] hours of flight training with an authorized instructor in a single-engine airplane in preparation for the practical test,” then pass the practical test. Then I’ll be a pilot! Meanwhile, whenever I fly I am working on improving the coordination of my turns (using rudder and ailerons in the right proportions), making sure to clear to the left or right before starting a turn, and remembering to execute the before landing checklist as soon as I start descending to the traffic pattern altitude.

Overall, flying is easier than I expected it to be. The most important rule is always to fly the airplane. No matter what is happening (say the propeller shatters, just as lightning strikes the wing and causes the electrical panel to catch fire, while simultaneously your passenger begins seizing and stops breathing), maintain control of the airplane!

- First, the propeller shattering means that you’ve lost your engine; follow the engine failure checklist. In the Cessna Skyhawk, establish a 68-knot glide speed; this will give you the most time and most distance to land. Then, look for the best place to land [you should already have a place in mind, before the emergency happens] and turn towards that place.

- Now, attend to the electrical fire. First, fly the airplane: maintain 68 knots, hold your heading, and keep flying toward your best place to land. Meanwhile, follow the electrical fire in flight checklist by turning off the master switch to cut power to the panel. Are you still flying the airplane? Good, now turn off all the other switches (still flying?), close the vents (fly), activate the fire extinguisher (fly), then get back to flying. (It may sound like I’m trying to be obtuse here, but that’s really the thought process you’re supposed to follow: if you don’t fly the airplane, it won’t matter in the end what you do to extinguish the fire.)

- Now, ignore your passenger. Your job is to get the airplane on the ground so that you can help or call for help. Unfortunately you don’t have a radio anymore — you lost it when you flipped the master switch — so once you’re within range of your best place to land, execute an emergency descent and get down as quickly as possible.

My new instructor often tells me that I’m flying too tensely, especially on the approach to landing; he remarks that I tighten my shoulders, look forward with intense concentration, make abrupt control movements, and maintain a death grip on the yoke. This tenseness is what I think of as “left-brained flying”: I am too cerebral, utilitarian, and immediate in my approach to maneuvering and in handling problems in the air. It gets the job done (I fly, I turn, I land, etc.) but doesn’t result in a very artistic (or comfortable) flight. I am working to be more of a “right-brained pilot,” reacting to the flow of events instead of to single events, making small corrections to the control surfaces and waiting to see their effect on my flight path, and in general relaxing and enjoying the flight instead of obsessing over the flight parameters.
